How This Study Could Help Doctors Diagnose Brain Tumors Faster—and Better

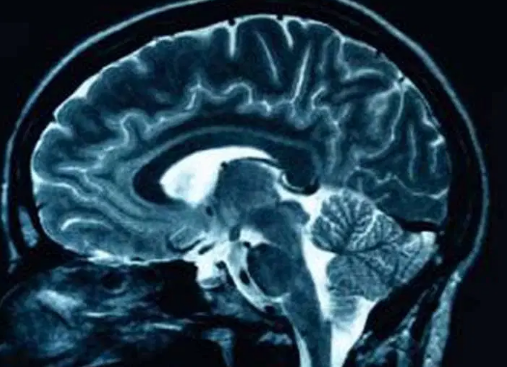

It starts with a scan. A blur of grayscale swirls across an MRI screen. Somewhere in that grainy image could be the beginning of something devastating—or nothing at all. For years, doctors have relied on experience, instinct, and traditional imaging tools to make these calls. But now, artificial intelligence is changing the game.

And not just any AI. A new study introduces a deep learning model that doesn’t just predict brain tumors—it tells you why.


Published in Scientific Reports, the study pairs a deep learning system with Explainable AI (XAI) to predict brain tumors from MRI scans with 92.98% accuracy. According to the authors, that compares favorably with many existing tools. More importantly, the system produces interpretable results that clinicians can check rather than take on faith.

Unlike older AI models that spit out predictions without explanation (the dreaded “black box”), this system highlights the parts of the scan behind each prediction in real time, giving a visual sense of the tumor's location, shape, and size. Doctors don't just get a result; they get insight.

The Hidden Risk You’re Missing in Medical AI

Here’s the problem: Most AI systems in healthcare are incredibly smart—but completely opaque. Clinicians can’t see how the algorithm arrives at a diagnosis, which creates a dangerous trust gap. What if it misses something subtle? What if it’s wrong?

This lack of transparency has been a major reason why AI adoption in medical settings has lagged.

The new model tackles this directly by integrating XAI tools like LIME and Grad-CAM, which visually highlight the brain regions that most influenced the AI's decision. Doctors can now see what the machine sees, and that's a game-changer.
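To make the Grad-CAM idea concrete: the method weights each feature map in a convolutional layer by how strongly the class score responds to it, then sums those weighted maps into a heatmap. The NumPy sketch below shows only that final weighting step. It assumes the layer's activations and the gradients of the “tumor” score have already been extracted from the trained network (deep learning frameworks do this with automatic differentiation); the function name `grad_cam` and the array shapes are illustrative assumptions, not details from the study.

```python
import numpy as np


def grad_cam(feature_maps: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Combine one conv layer's activations into a Grad-CAM heatmap.

    feature_maps: activations A with shape (H, W, K) for a single image.
    gradients:    d(class score)/dA with the same shape (H, W, K).
    Returns an (H, W) heatmap scaled to [0, 1].
    """
    if feature_maps.shape != gradients.shape:
        raise ValueError("feature_maps and gradients must have the same shape")
    # Channel importance: average each channel's gradient over all spatial positions.
    weights = gradients.mean(axis=(0, 1))  # shape (K,)
    # Weighted sum of the K feature maps; ReLU keeps only evidence for the class.
    cam = np.maximum(np.tensordot(feature_maps, weights, axes=([2], [0])), 0.0)
    # Rescale to [0, 1] for display; an all-zero map is returned unchanged.
    peak = cam.max()
    return cam / peak if peak > 0 else cam


# Toy example: random numbers stand in for real network outputs.
rng = np.random.default_rng(0)
activations = rng.random((7, 7, 4))
grads = rng.normal(size=(7, 7, 4))
heatmap = grad_cam(activations, grads)
print(heatmap.shape)  # (7, 7)
```

In a full system, the small (H, W) map would then be upsampled to the MRI's resolution and overlaid on the scan as a color heatmap.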

Only 7% Wrong: A Surprising Stat Behind This AI Model

Let’s talk numbers: The best-performing version of the model, a fine-tuned NASNet Large neural network, reached 92.98% accuracy, which means 7.02% of its predictions were wrong. To put it simply: out of every 100 scans, the model gets about 93 right, whether the correct answer is “tumor” or “no tumor,” and shows doctors why it chose each answer.
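Accuracy counts correct answers across both classes, so it is not the same thing as the share of tumors the model catches. The short Python sketch below makes that distinction concrete. The confusion-matrix counts are hypothetical numbers chosen for illustration, not results from the study.

```python
def summarize(tp: int, fn: int, tn: int, fp: int) -> dict:
    """Standard binary-classification metrics from confusion-matrix counts."""
    total = tp + fn + tn + fp
    accuracy = (tp + tn) / total
    return {
        "accuracy": accuracy,            # correct calls across both classes
        "error_rate": 1 - accuracy,      # the study's 7.02% is this quantity
        "sensitivity": tp / (tp + fn),   # share of tumor scans flagged
        "specificity": tn / (tn + fp),   # share of tumor-free scans cleared
    }


# Hypothetical 100-scan test set (50 with tumors, 50 without); NOT the study's data.
stats = summarize(tp=45, fn=5, tn=48, fp=2)
print({name: round(value, 3) for name, value in stats.items()})
# {'accuracy': 0.93, 'error_rate': 0.07, 'sensitivity': 0.9, 'specificity': 0.96}
```

In this made-up example the model is 93% accurate yet misses 5 of the 50 tumors (a 10% miss rate), which is why diagnostic studies often report sensitivity and specificity alongside accuracy.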

That is a notable step forward from many existing systems, which either struggle with small datasets or operate as inscrutable black boxes.

New Research Reveals 3 Keys to Smarter Brain Tumor Diagnosis

- Better data, better models: The model is trained on enhanced MRI scans, with data augmentation expanding the training set into a more diverse collection of high-quality images.

- Powerful AI, now interpretable: By combining deep learning with LIME and Grad-CAM, the model doesn’t just guess—it shows its work.

- Built for the real world: With cloud integration and live validation, doctors can use this tool across hospitals, clinics, or even mobile devices connected via the Internet of Medical Things (IoMT).
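The article does not spell out which augmentations the authors applied, so the following is only a sketch built on common, assumed choices for 2-D MRI slices: a random left-right flip, a small random shift, and a mild brightness and contrast change. Each call turns one training slice into a slightly different variant, which is how augmentation stretches a limited dataset. The function names `shift` and `augment` and all parameter ranges are hypothetical.

```python
import numpy as np


def shift(image: np.ndarray, dy: int, dx: int) -> np.ndarray:
    """Translate a 2-D image by (dy, dx) pixels, filling uncovered areas with 0."""
    h, w = image.shape
    out = np.zeros_like(image)
    if abs(dy) >= h or abs(dx) >= w:  # shifted completely out of frame
        return out
    src_rows = slice(max(0, -dy), min(h, h - dy))
    dst_rows = slice(max(0, dy), min(h, h + dy))
    src_cols = slice(max(0, -dx), min(w, w - dx))
    dst_cols = slice(max(0, dx), min(w, w + dx))
    out[dst_rows, dst_cols] = image[src_rows, src_cols]
    return out


def augment(image: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Return one randomly augmented copy of a grayscale slice scaled to [0, 1]."""
    out = image
    if rng.random() < 0.5:  # random left-right flip
        out = out[:, ::-1]
    # Random shift of up to 10% of the height and width.
    max_dy, max_dx = image.shape[0] // 10, image.shape[1] // 10
    out = shift(out, int(rng.integers(-max_dy, max_dy + 1)),
                int(rng.integers(-max_dx, max_dx + 1)))
    # Mild contrast (scale) and brightness (offset) jitter, clipped back to [0, 1].
    out = out * rng.uniform(0.9, 1.1) + rng.uniform(-0.05, 0.05)
    return np.clip(out, 0.0, 1.0)


# Turn one synthetic slice into four training variants.
rng = np.random.default_rng(42)
slice_2d = rng.random((128, 128))
variants = [augment(slice_2d, rng) for _ in range(4)]
print([v.shape for v in variants])  # [(128, 128), (128, 128), (128, 128), (128, 128)]
```

Zero-filling after a shift keeps anatomy from wrapping around the frame, which a circular shift such as `np.roll` would do.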

From Scan to Clarity: How the System Works

Here’s the life cycle of a diagnosis using this AI system:

- Scan: A patient’s MRI is uploaded.

- Preprocessing: The image is resized, cleaned, and augmented to boost data diversity.

- Prediction: The NASNet model analyzes the image and classifies it as “tumor” or “no tumor.”

- Explanation: LIME and Grad-CAM generate visual heatmaps showing which regions of the scan drove the AI's call, so doctors can check whether it focused on the suspected tumor.

- Decision Support: Doctors review the highlighted images, backed by interpretable data, and make an informed decision.
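The life cycle above can be sketched end to end in a few dozen lines of Python. This is a minimal illustration under loud assumptions: `toy_model` is a hypothetical stand-in for the trained NASNet Large classifier (it only scores brightness in one corner of the image and detects nothing), images are resized by nearest-neighbour sampling, and the explanation step is a simplified LIME-style procedure that uses a square grid instead of real superpixels and plain weighted least squares instead of LIME's ridge regression. Augmentation and Grad-CAM are omitted from this scoring path for brevity.

```python
import numpy as np


def preprocess(scan: np.ndarray, size: int = 64) -> np.ndarray:
    """Resize a 2-D scan (nearest-neighbour) and min-max scale it to [0, 1]."""
    rows = np.linspace(0, scan.shape[0] - 1, size).round().astype(int)
    cols = np.linspace(0, scan.shape[1] - 1, size).round().astype(int)
    resized = scan[np.ix_(rows, cols)].astype(float)
    lo, hi = resized.min(), resized.max()
    return (resized - lo) / (hi - lo) if hi > lo else np.zeros_like(resized)


def toy_model(image: np.ndarray) -> float:
    """HYPOTHETICAL classifier stand-in: scores upper-left brightness, in [0, 1]."""
    h, w = image.shape
    return float(np.clip(4 * image[: h // 2, : w // 2].mean(), 0.0, 1.0))


def lime_style_map(image, predict, grid=4, n_samples=200, kernel_width=0.25, seed=0):
    """Simplified LIME: switch grid patches on/off and fit a weighted linear model."""
    rng = np.random.default_rng(seed)
    h, w = image.shape
    # Label every pixel with the index of the grid patch it falls in.
    segments = (np.arange(h)[:, None] * grid // h) * grid + np.arange(w)[None, :] * grid // w
    n_patches = grid * grid
    masks = rng.integers(0, 2, size=(n_samples, n_patches))
    masks[0] = 1  # keep the unperturbed image among the samples
    scores = np.array([predict(image * m[segments]) for m in masks])
    # Weight each sample by similarity to the original (cosine distance, exp kernel).
    distance = 1 - np.sqrt(masks.sum(axis=1) / n_patches)
    weights = np.exp(-(distance ** 2) / kernel_width ** 2)
    # Weighted least squares: score ~ intercept + sum of per-patch coefficients.
    X = np.hstack([np.ones((n_samples, 1)), masks])
    root_w = np.sqrt(weights)
    coef, *_ = np.linalg.lstsq(X * root_w[:, None], scores * root_w, rcond=None)
    return coef[1:][segments]  # paint each patch with its importance


def diagnose(scan, model=toy_model, threshold=0.5):
    """Scan -> preprocessing -> prediction -> explanation, for a doctor to review."""
    image = preprocess(scan)
    score = model(image)
    label = "tumor" if score >= threshold else "no tumor"
    heatmap = lime_style_map(image, model)
    return label, score, heatmap


# Synthetic 128x128 "scan" with one bright square; not real MRI data.
scan = np.full((128, 128), 20.0)
scan[16:48, 16:48] = 200.0
label, score, heatmap = diagnose(scan)
print(label, round(score, 2))  # tumor 1.0
```

Swapping `toy_model` for a real trained network would leave the rest of the flow unchanged, though the explanation step gets slower because it runs the model once per perturbed image (200 times here).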

Why This Matters Now

Brain tumors are rare but deadly. Fast and accurate diagnosis can be the difference between life and loss. Yet many models fail in real-world settings because they:

- Can’t handle messy or limited datasets

- Overfit during training

- Don’t explain their logic

This new model directly addresses these pain points and brings us closer to AI that doesn’t replace doctors—but makes them better.

What’s Next?

The study’s authors are looking to:

- Test the model on larger, multi-site datasets

- Integrate transfer learning and synthetic data to fill gaps

- Compare with newer architectures like vision transformers

But the message is clear: AI in healthcare is maturing—and transparency is no longer optional.

Join the Conversation

- Do you think doctors will trust AI more if it can explain its decisions?

- Could this model work in your healthcare setting?

- How do we balance speed, accuracy, and interpretability in life-or-death decisions?