TLDR! Community Psychology and Article Summarization

How long is your reading list? Mine is obscene. This is a challenge because the written word is still the major way that we share and communicate ideas. For those of us who work in the space of ideas, being able to write and read is the coin of realm.

Our good friend Julia Moore has written for our blog about the tricks that she uses to stay up to date. But could there be other solutions that give us the main points of an article quickly?

For the biennial SCRA conference this year (the gathering of community psychologists), I was asked to participate in a panel on computer-aided solutions to emerging problems. Our work in monitoring monthly community psychology trends caught the attention of the session organizer, Jennifer Lawlor. Because I had recently talked about that work in a presentation at the Global Implementation Conference, I wanted to focus on something else.

I’ve been interested in the idea of article summarization since the early days of PubTrawlr. The general purpose is to condense long blocks of text like news articles into the key ideas. There are two major ways to approach this problem: Abstractive and Extractive Summarization

Abstractive Summarization

Abstractive Summarization takes the pre-established text data, then generates new text based on which words or characters are most likely to occur. As you might guess, abstractive summarization is still in its infancy, because text generation can often lead to strange spellings and expressions. However, I do want to note that I’m not as familiar with GPT-3 powered summarization, which may do a much more effective job.

Extractive Summarization

This brings me to the more commonplace method, Extractive Summarization. This method examines all the sentences in a piece to work out which sentences are “most representative” of all the others.

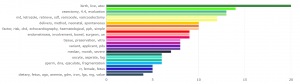

For the SCRA presentation, I took two different implementations of extractive summarization: LexRankr and Textrank. LexRankr is an implementation of the methods described in this article. It looks for the centrality of certain words within a set. Textrank uses a version of Google’s page ranking to determine important words and then their presence in various sentences.

Let’s consider an ideal output. Ideally, we find something that’s useful and comprehensively captures all the information. To get to the second output, I compared the outputs in terms of readability using three readability metrics: Flesch.Kincaid, FOG.PSK, and FOG.NRI. What I found is that TextRank generally produced more readable results, but with the trade-off of processing time, which was about 10 times as long as LexRankr.

But you don’t have to take my word for it. We put an early widget up on PubTrawlr in a new, hidden section we’re calling Davy Jones’ Locker. This is the place we plan to put really early experiments, tests, and other silliness.

The TLDR bot summarizes a few types of articles from select websites (BMC, PMC, and Springer full-text selections.) Also, to be absolutely clear; we don’t own this content. Copyright law can apply to summarization and translations, so for now, this is just an experiment. As such, in its current state, these single article summarizations are not likely to be implemented as a regular PubTrawlr feature. Still, we’ll take feedback and keep playing around.

What’s the advantage of this over an abstract?

My regular and much smarter colleague, Victoria Scott, was able to play around with this method and remarked, “This is really cool. Why would someone use this instead of reading an abstract?” And that is a wonderful question that actually stumped me. The bot is rough, really rough. It’s possible that the traditional structure of scientific articles doesn’t lend itself that well to this method. Let’s break down why.

Think about news articles. News articles generally start with main points, then provide supporting detail. The main points should be easy to find. To the point, extractive summarization does pretty well with news stories. Scientific articles, though, have distinct sections of varying importance. The introduction provides the justification for a question. The methods show how the researchers attempted to answer that question. The results show what they found, and the discussion lays out what it means. There can be a lot of fluff in there. I had a professor once who famously claimed to never read discussion sections.

Keeping all that in mind, there’s two ways to continue the inquiry into this method. Victoria suggested comparing the outputs of this to an abstract to see how they matched point by point. This would get back to our goal of a comprehensive output. Another strategy would be to pull text only from specific sections and do a separate extractive algorithm on each of them. This would be sort of like an automatic abstract generator. The challenge, though, is that full-text articles are encoded (and sometimes fundamentally structured) differently, so getting to a generalized solution is really challenging, and may not be worth the squeeze.

Any other thoughts about how to consume scientific information? Take our PubTrawlr User Survey and get some cool swag to impress your friends and loved ones!