Less words, more action. Comments on Brownson et al.

A recent article is making the rounds in the Twitter circles I follow: Brownson et al.’s “Implementation science should give higher priority to health equity.” Because so much of PubTrawlr’s work is about both implementation science AND equity, let’s deconstruct what this article is saying.

A word about equity first. Health outcomes can vary for no reason other than zip code. This is unacceptable, unjust, and immoral.

Implementation science has been tasked with understanding how good ideas get into practice and facilitating the conditions that lead to better outcomes. It’s natural that implementation scientists should be deeply considering how interventions do or do not lead to equitable outcomes.

This idea has been floating around in the background for a while and is starting to show up front and center. The CDC has finally gotten explicit on the topic, as seen in this statement from early April 2021. The Global Implementation Conference’s theme this year is “Addressing Health Equity.” The biennial community psychology conference is centered around uprooting white supremacy. And now we have this paper, “Implementation Science should…”. Let’s break some of the salient points into things I like and things I don’t.

Things I like!

Nice thing 1: Study what is already happening: more practice-based evidence. “I like this” isn’t strong enough. I love this. There is so much front-line progress that falls into the knowledge chasm because it isn’t documented, and certainly not disseminated. The authors cite a pretty powerful example:

“In a study of implementation of mental health services, Aby found three important themes showing how participants experienced implementation: invisibility (e.g., not enough mental health providers of color), isolation (e.g., separation and lack of collaboration among key stakeholder), and inequity (e.g., feeling tokenized or unwelcome)”

That’s not good! Multi-method evaluation (and empowerment evaluation models) can be extremely potent in capturing these stories and making sure that lessons learned are identified. The WE in the World gang does a great job providing evaluation mechanisms to make sure stories are captured.

Nice Thing 2: Design and Tailor Implementation Strategies. One of my talking points going all the way back to my old job is that in order to enhance implementation, we need to use the right tools for the job. The ERIC strategies that have been floating around for a few years are nice, but don’t go nearly as far as they should in matching strategies to conditions. This is where my colleagues at The Dawn Chorus Center are moving by identifying strategies from diverse change literature (like business and marketing) that correspond to specific elements of readiness.

To pull the equity component into this, organizations that are “less ready” are less likely to qualify for support. So, if we can tailor strategies to specific readiness stages, then we can help support improved implementation in these organizations, and help facilitate equity-related interventions.

Things I don’t

Critique 1. Lack of Voice. Something big is missing here: The voice and meaningful participation of community members. Implementation science is by nature a top-down development and dissemination paradigm. Researchers study something, figure out how it works, and then put it in other places. And that’s cool.

But, but, but…

Doing things to a population is not the same as doing things with a population. We need to make sure that community members are equal partners, and potentially drivers, of any community-based change effort.

Critique 2. Cutoffs? Brownson et al. state: “To identify relevant literature for this article, a review of reviews was conducted using searches for English-language documents published between January 2015 and February 2021.” I can’t for the life of me figure out why people do this.

First, what is it about January 2015 that makes for an appropriate cutoff? Further, if the authors are pulling in literature from one month ago, it’s not like it’s taking them a long time to go through the literature.

Second, why just English? These decisions are more important for an equity-focused article because we must be inclusive in pulling in disparate voices. The only reason I can see is pragmatic; just making the research and writing easier. I get it, but it kind of goes against the central thesis of the article right at the beginning.

Third, to be equitable we have to recognize the continuum of evidence. Equity-based research and practice is not sealed away in peer-reviewed journals. It comes from the vibrant policies and practices put forth by governments and community-based organizations alike.

Critique 3. Equity-related metrics can be exclusionary. I generally find that implementation science worships at the altar of measurement. I get it. If we don’t understand the conditions on the ground, we can’t tell if we have a problem and/or a good solution. And I get that there’s an equitable measurement desert. PubTrawlr found 220 articles on the topic, and these tended to cluster mostly around content areas (as seen in the network plot below).

The problem is that evaluation can be burdensome and resource-intensive. Even reporting on metrics can be challenging for those with limited surveillance capacity, which can exclude the communities and organizations that lack it. This creates blind spots. Friends of the Future Historian at NHJI have written eloquently about how this creates a particular problem for philanthropy. Okay, so maybe the solution is to develop automatic data collection methods via web scraping and other OSINT paradigms so evaluation becomes an automatic process? I personally like this idea a lot and have done some research on how to pull more effective community-based information (from tweets, for example).

However, we then get into another equity-related problem where we take away the ability of communities to own their own data and tell their own story. Equitable measurement is not just about invariance or other psychometric properties. It’s about ensuring that those most impacted by a policy or intervention are able to articulate those impacts, in practical and emotional terms.

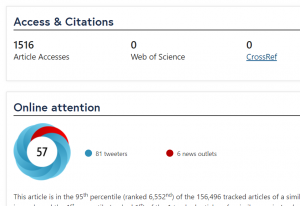

Critique 4. Focus on equity in dissemination efforts. Nothing inherently wrong with this, but the authors don’t go nearly far enough. We have to make sure that dissemination products are broad, have an adequate reach, and are designed for end-users. To be a bit unfair, let’s check out this article’s metrics as of March 26th.

That’s really not going to cut it. Equity-related practitioners really need to rethink how to get materials out, but the peer-reviewed literature may not be the appropriate way to reach a large number of people.

It’s an okay article, but let me take a stab at retitling: